- Workato Pros Discussion Board

- Parsing a CSV and hitting the 50K row limit

04-04-2024 06:55 AM

Is there a way to split a CSV into multiple files to get around the 50K-record limit of the Parse CSV action? I know there's a datapill that gives the list size, but I'm not sure how to set things up so that the first 50K records are parsed as one file, the next batch of records as a second file, and so on.

04-04-2024 09:11 PM

My understanding is that 50K is not a hard limit; the real limit is based on memory usage. I regularly use the CSV action on files with 200K+ records without issue. You could also try SQL Collection, which is pretty much bulletproof when it comes to size limits.

If you really want to break it up into 50k chunks, I can provide some quick instructions after you confirm.

04-22-2024 04:29 AM

Hi @matt-kruzicki,

I've found a solution for chunking large files (over 50K records). I stored the large CSV file in Google Drive and used a simple Python script to split it into chunks. See the recipe linked below for details:

Recipe Link: Chunking the csv file which has more than 50K records

Let me know if you find this helpful.
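
For reference, here is a minimal sketch of the kind of Python chunking script described above. It is not the script from the linked recipe. The function name `chunk_csv`, the `chunk_size` parameter, and the choice to treat the first row as a header that is repeated in every chunk are all assumptions made for illustration. How the chunks are passed back into the recipe is left out.

```python
import csv
import io
from itertools import islice


def chunk_csv(csv_text, chunk_size=50_000):
    """Split CSV text into CSV strings with at most chunk_size data rows each.

    Assumption: the first row is a header, and it is repeated at the top
    of every chunk so each chunk can be parsed on its own.
    """
    # newline="" lets the csv module handle line breaks inside quoted fields.
    reader = csv.reader(io.StringIO(csv_text, newline=""))
    header = next(reader, None)
    if header is None:
        return []  # empty input, nothing to chunk

    chunks = []
    while True:
        # Pull the next batch of rows without loading the whole file as a list.
        rows = list(islice(reader, chunk_size))
        if not rows:
            break
        out = io.StringIO()
        writer = csv.writer(out, lineterminator="\n")
        writer.writerow(header)
        writer.writerows(rows)
        chunks.append(out.getvalue())
    return chunks


# Small demonstration with a chunk size of 2 instead of 50,000.
sample = "id,name\n1,a\n2,b\n3,c\n"
parts = chunk_csv(sample, chunk_size=2)
print(len(parts))  # 2
```

Each returned string is a complete CSV with its own header row, so it can be parsed separately. A file that contains only a header row produces no chunks.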

04-25-2024 01:18 AM - edited 04-27-2024 01:52 PM

Hi, everyone!

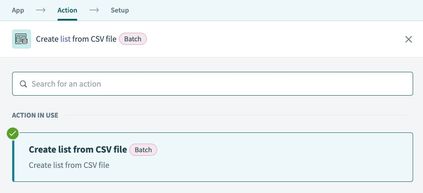

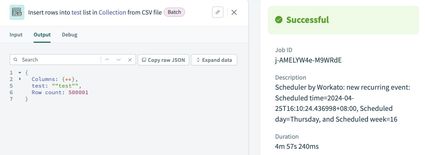

To handle CSVs with more than 50K rows, we suggest using the "Create list from CSV File" action via SQL Collection. It does not have a hard limit but will follow the platform-wide job timeout limit.

Here's a test on a CSV with 500k rows :

04-25-2024 07:53 PM

This is a better option than chunking the file.